Deadlock

Deadlock is more serious than indefinite postponement or starvation because it affects more than one job. Because resources are being tied up, the entire system (not just a few programs) is affected. There’s no simple and immediate solution to a deadlock; no one can move forward until someone moves out of the way, but no one can move out of the way until either someone advances or everyone behind moves back. Obviously, it requires outside intervention. Only then can the deadlock be resolved. Deadlocks became prevalent with the introduction of interactive systems, which generally improve the use of resources through dynamic resource sharing, but this capability also increases the possibility of deadlocks. In some computer systems, deadlocks are regarded as a mere inconvenience that causes delays. But for real-time systems, deadlocks cause critical situations. For example, a deadlock in a hospital’s life support system or in the guidance system aboard an aircraft could endanger lives. Regardless of the environment, the operating system must either prevent deadlocks or resolve them when they happen. (In Chapter 12, we learn how to calculate system reliability and availability, which can be affected by processor conflicts.)

Seven Cases of Deadlock or Livelock

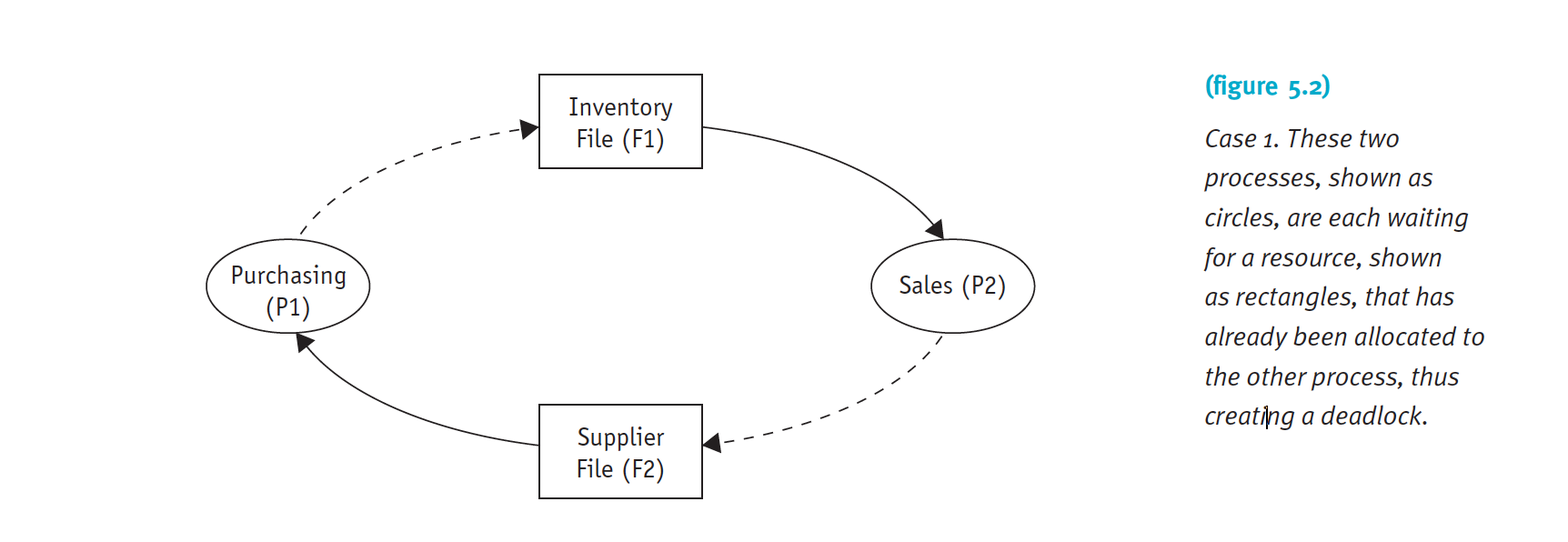

A deadlock usually occurs when non-sharable, non-preemptable resources, such as files, printers, or scanners, are allocated to jobs that eventually require other non-sharable, nonpreemptive resources—resources that have been locked by other jobs. However, deadlocks aren’t restricted to files, printers, and scanners. They can also occur on sharable resources that are locked, such as disks and databases. Directed graphs visually represent the system’s resources and processes and show how they are deadlocked. Using a series of squares for resources, circles for processes, and connectors with arrows for requests, directed graphs can be manipulated to understand how deadlocks occur.

Case 1: Deadlocks on File Requests

If jobs are allowed to request and hold files for the duration of their execution, a deadlock can occur, as the simplified directed graph shown in Figure 5.2 illustrates.

For example, consider the case of a home construction company with two application programs, Purchasing and Sales, which are active at the same time. Both need to access two separate files, called Inventory and Suppliers, to read and write transactions. One day the system deadlocks when the following sequence of events takes place:

- The Purchasing process accesses the Suppliers file to place an order for more lumber.

- The Sales process accesses the Inventory file to reserve the parts that will be required to build the home ordered that day.

- The Purchasing process doesn’t release the Suppliers file, but it requests the Inventory file so it can verify the quantity of lumber on hand before placing its order for more. However, Purchasing is blocked because the Inventory file is being held by Sales.

- Meanwhile, the Sales process doesn’t release the Inventory file (because it needs it), but requests the Suppliers file to check the schedule of a subcontractor. At this point, the Sales process is also blocked because the Suppliers file is already being held by the Purchasing process. In the meantime, any other programs that require the Inventory or Suppliers files will be put on hold as long as this situation continues. This deadlock will remain until one of the two programs is closed or forcibly removed and its file is released. Only then can the other program continue and the system return to normal.

Case 2: Deadlocks in Databases

A deadlock can also occur if two processes access and lock records in a database. To appreciate the following scenario, remember that database queries and transactions are often relatively brief processes that either search or modify parts of a database. Requests usually arrive at random and may be interleaved arbitrarily. Database locking is a technique used to guarantee the integrity of the data through which the user locks out all other users while working with the database. Locking can be done at three different levels: the entire database can be locked for the duration of the request; a subsection of the database can be locked; or only the individual record can be locked. Locking the entire database (the most extreme and most successful solution) prevents a deadlock from occurring, but it restricts access to the database to one user at a time and, in a multiuser environment, response times are significantly slowed; this is normally an unacceptable solution. When the locking is performed on only one part of the database, access time is improved, but the possibility of a deadlock is increased because different processes sometimes need to work with several parts of the database at the same time. Here’s a system that locks each record in the database when it is accessed and keeps it locked until that process is completed. Let’s assume there are two processes (The sales process and address process), each of which needs to update a different record (the final exam record and the current address record), and the following sequence leads to a deadlock:

- The sales process accesses the first quarter record and locks it.

- The address process accesses the current address record and locks it.

- The sales process requests the current address record, which is locked by the address process.

- The address process requests the first quarter record, which is locked by the sales process. If locks are not used to preserve database integrity, the resulting records in the database might include only some of the data—and their contents would depend on the

order in which each process finishes its execution. Known as a race between processes, this is illustrated in the example shown in Figure 5.3. (Leaving all database records unlocked is an alternative, but that leads to other difficulties.) Let’s say you are a student of a university that maintains most of its files on a database that can be accessed by several different programs, including one for grades and another listing home addresses. You’ve just moved at the end of fall term, so you send the university a change of address form. It happens to be shortly after grades are submitted. One fateful day, both programs race to access a single record in the database:

- The grades process (P1) is the first to access your college record (R1), and it copies the record to its own work area.

- Almost simultaneously, the address process (P2) accesses the same record (R1) and copies it to its own work area. Now the same record is in three different places: the database, the work area for grade changes, and the work area for address changes.

- The grades process changes your college record by entering your grades for the fall term and calculating your new grade average.

- The address process changes your college record by updating the address field.

- The grades process finishes its work first and writes its version of your college record back to the database. Now, your record has updated grades, but your address hasn’t changed.

- The address process finishes and rewrites its updated version of your record back to the database. This version has the new address, but it replaced the version that contains your grades! At this point, according to the database, you have no grade for this term. If we reverse the order and say that the address process wins the race, your grades will be updated but not your address. Depending on your success in the classroom, you might prefer one mishap over the other. From the operating system’s point of view, both alternatives are unacceptable, because both are incorrect and allow the database to become corrupted. A successful operating system can’t allow the integrity of the database to depend on luck or a random sequence of events.

Case 3: Deadlocks in Dedicated Device Allocation

The use of a group of dedicated devices, such as two audio recorders, can also deadlock the system. Remember that dedicated devices cannot be shared. Let’s say two administrators, Chris and Jay, from the local board of education are each running education programs and each has a process (P1 and P2, respectively) that will eventually need to use both audio recorders (R1 and R2) together to copy a school board hearing. The two recorders are available at the time when both make the request; the system’s allocation policy states that each recorder is to be allocated on an “as requested” basis. Soon the following sequence transpires:

- Chris’s process requests the recorder closest to the office, Recorder #1, and gets it.

- Jay’s process requests the recorder closest to the printer, Recorder #2, and gets it.

- Chris’s process then requests Recorder #2, but is blocked and has to wait.

- Jay’s process requests Recorder #1, but is blocked and the wait begins here, too. Neither Chris’s or Jay’s job can continue because each is holding one recorder while waiting for the other process to finish and release its resource—an event that will never occur according to this allocation policy.

Case 4: Deadlocks in Multiple Device Allocation

Deadlocks aren’t restricted to processes that are contending for the same type of device; they can happen when several processes request, and hold on to, several dedicated devices while other processes act in a similar manner, as shown in Figure 5.4.

Consider the case of an engineering design firm with three programs (P1, P2, and P3) and three dedicated devices: scanner, printer, and plotter. The following sequence of events will result in deadlock:

- Program 1 (P1) requests and gets the only scanner.

- Program 2 requests and gets the only printer.

- Program 3 requests and gets the only plotter.

- Now, Program 1 requests the printer but is blocked.

- Then, Program 2 requests the plotter but is blocked.

- Finally, Program 3 requests the scanner but is blocked and the deadlock begins. As was the case in the earlier examples, none of these programs can continue each is waiting for a necessary resource that’s already being held by another.

Modeling Deadlocks

In 1972, Richard Holt published a visual tool to show how the four conditions can be modeled using directed graphs. (We used modified directed graphs in figures shown previously in this chapter.) These graphs use two kinds of symbols: processes represented by circles and resources represented by squares. A solid line with an arrow that runs from a resource to a process, as shown in Figure 5.7(a), means that that resource has already been allocated to that process (in other words, the process is holding that resource). A dashed line with an arrow going from a process to a resource, as shown in Figure 5.7(b), means that the process is waiting for that resource. The directions of the arrows are critical because they indicate flow. If for any reason there is an interruption in the flow, then there is no deadlock. On the other hand, if cyclic flow is shown in the graph, (as illustrated in Figure 5.7(b), then there’s a deadlock involving the processes and the resources shown in the cycle.

In (a), Resource 1 is being held by Process 1 and Resource 2 is held by Process 2 in a system that is not deadlocked. In (b), Process 1 requests Resource 2 but doesn’t release Resource 1, and Process 2 does the same— creating a deadlock. (If one process released its resource, the deadlock would be resolved.)

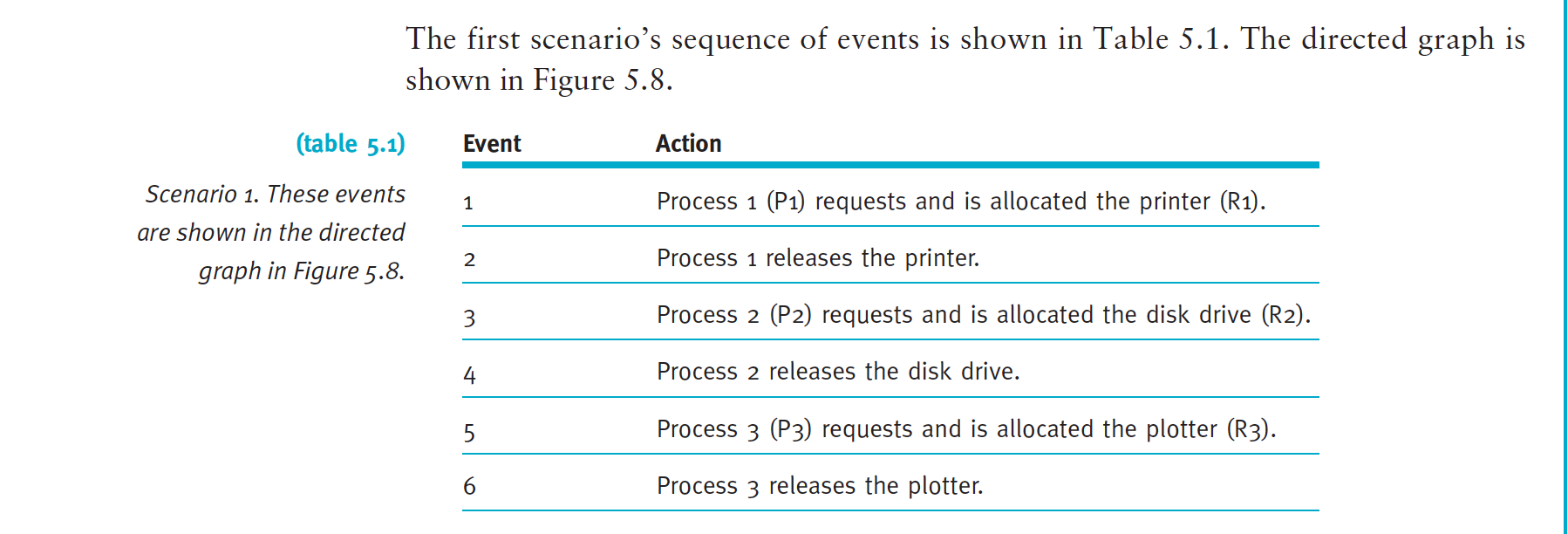

To practice modeling a system, the next three scenarios evaluate a system with three processes—P1, P2, and P3. The system also has three resources—R1, R2, and R3—each of a different type: printer, disk drive, and plotter. Because there is no specified order in which the requests are handled, we look at three different possible scenarios using graphs to help us detect any deadlocks.

Scenario 1: No Deadlock

Notice in the directed graph that no cycles were formed at any time. Therefore, we can safely conclude that a deadlock can’t occur with this system at this time, even if each process requests each of the other resources because every resource is released before the next process requests it.

Scenario 2 – Resource Holding

Now, consider a second scenario’s sequence of events shown in Table 5.2.

The progression of the directed graph is shown in Figure 5.9. A deadlock occurs because every process is waiting for a resource that is being held by another process, but none will be released without intervention.

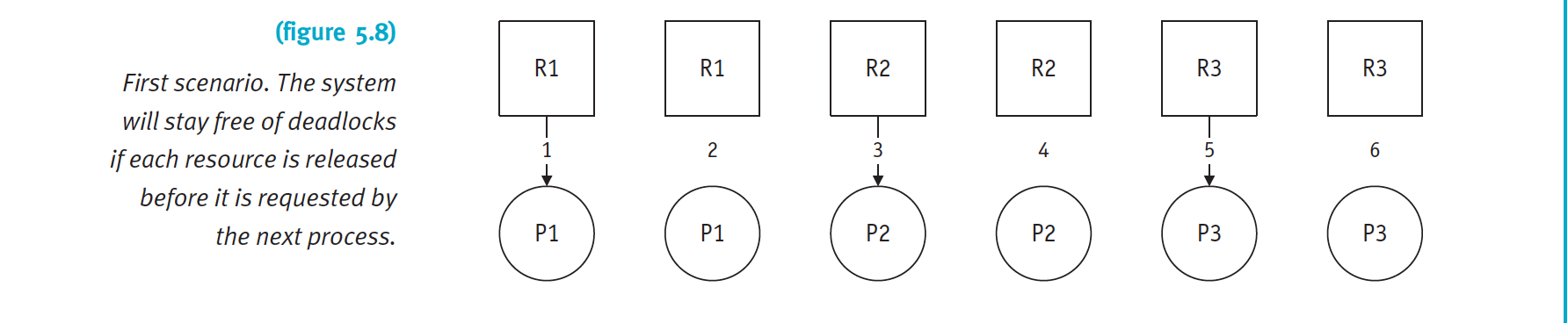

Scenario 3 – Deadlock Broken

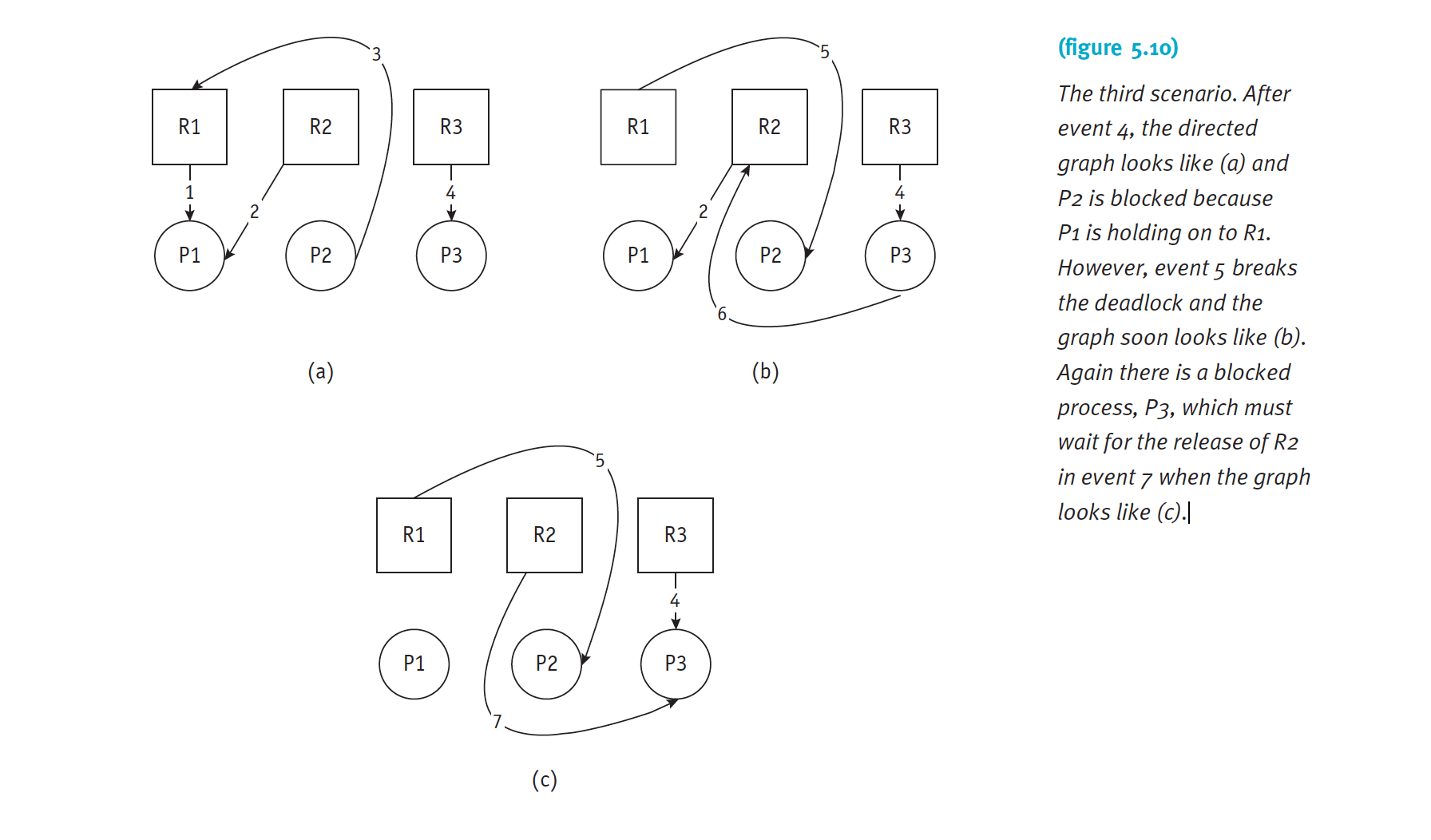

The third scenario is shown in Table 5.3. As shown in Figure 5.10, the resources are released before deadlock can occur.

The third scenario. After event 4, the directed graph looks like (a) and P2 is blocked because P1 is holding on to R1. However, event 5 breaks the deadlock and the graph soon looks like (b). Again there is a blocked process, P3, which must wait for the release of R2 in event 7 when the graph looks like (c).

Strategies for Handling Deadlocks

As these examples show, a system’s requests and releases can be received in an unpredictable order, which makes it very difficult to design a foolproof preventive policy. In general, operating systems use some combination of several strategies to deal with deadlocks:

- Prevent one of the four conditions from occurring: prevention.

- Avoid the deadlock if it becomes probable: avoidance.

- Detect the deadlock when it occurs: detection.

- Recover from it gracefully: recovery.

References

“understanding-operating-systems-7th”

comments powered by Disqus